Subscribe to NewscastStudio for the latest news, project case studies and product announcements in broadcast technology, creative design and engineering delivered to your inbox.

Fox Sports’ redo of Studio A in Los Angeles represents an evolution of the network’s design and technology integration, bringing new, eye-catching elements that let it create its trademark standout looks.

“This was something that has never been done before at this scale on a live broadcast,” said Zac Fields, the division’s senior vice president of graphic technology and integration. “Because of this, much of the workflow is custom. We spent a lot of time doing demos and tests proving out various features.”

In many ways, this project personifies the Fox Sports way of creating bold looks that take risks — all in the name of bringing an unmatched experience to viewers’ TVs.

The project required collaboration between house engineers, in-house software engineers, artists and vendors, including the design team at Girraphic, who handled virtual set extensions, video wall and volume graphics and extended mixed reality features.

Fox Sports Creative Services created some of these elements, and Girraphic was tasked with using its expertise to get the designs into Vizrt and Unreal — while also designing elements from the ground up.

This process started with design meetings that covered all of Fox’s goals and vision for the space.

“Our team explored how these segments were already run and what their current look was, and what kind of fun tools we could create to help bring the segments to life. We wanted to ensure that these segments still felt familiar and usable not only to the Fox control room and on-air talent, but also the Fox NFL fans,” explained Girraphic CEO Nathan Marsh.

A Fox executive vice president, Gary Hartley, reached out to Girraphic in January 2022 and the team headed to L.A. to see what scale the Fox team had in mind.

Fox’s NASCAR studio in Charlotte.

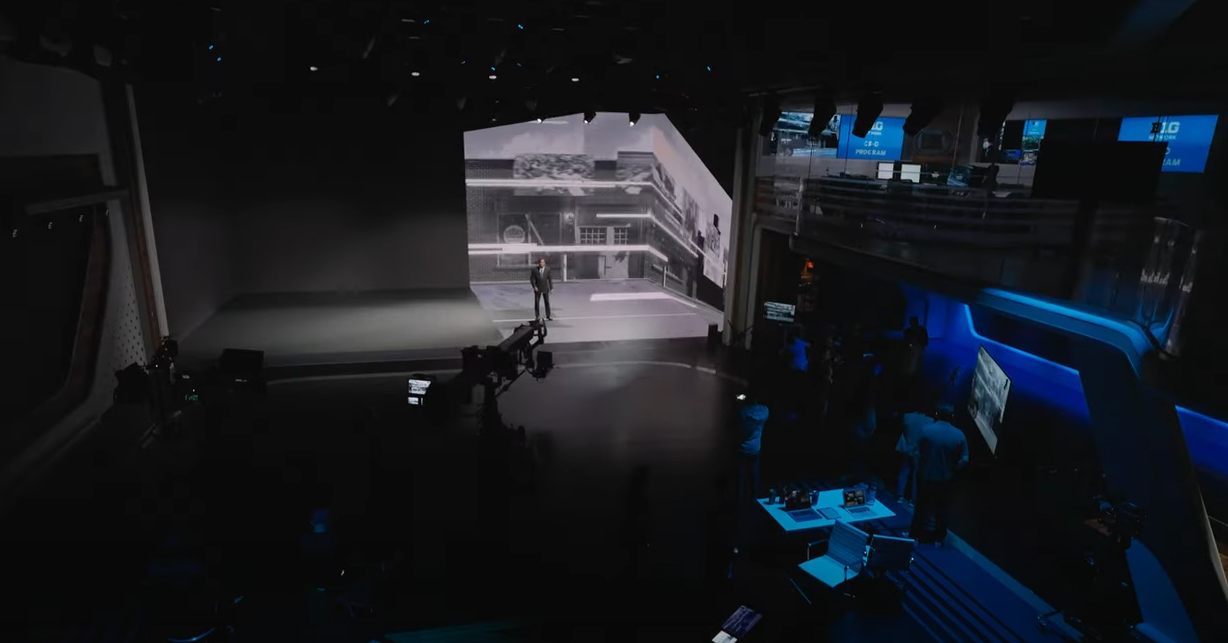

For the new Studio A, Fox opted to implement all of these technologies and design elements on a massive scale. It drew on lessons learned, and confidence gained, from earlier applications of extended reality for its NASCAR coverage at a North Carolina broadcast facility that came online a few years ago and is primarily based on green screens.

For its signature studio in L.A., green screen was quickly ruled out as an option, since the team felt the technique had reached the point where innovation had flattened. Instead, the network opted for an LED volume.

LED volumes, typically massive stretches of seamless video panels arranged in wraparound walls that can also cover the floor and ceiling, are becoming increasingly popular in film and TV production as a way to place talent inside a space that might previously have been shot on green screen. The technique has the added advantages of requiring less post-production, producing more realistic perspective shifts when the camera moves and letting talent feel as though they’re in the environment being portrayed.

One common application of LED volumes is scenes in which characters drive a vehicle. Before, this was often done with a vehicle frame placed in front of a green screen. Post-production would add elements such as passing traffic out the rear window of the vehicle, as well as different views for one-shots of characters with the side windows as backgrounds. Some would even edit in subtle reflections on the windshield or other glass to increase the realism.

Now, even a relatively small LED volume can be used to shoot these types of scenes, with video loops shown in the wraparound LED video wall showcasing the background elements. Some setups even include a ceiling-mounted array that provides real reflections on the glass.

There have also been other types of film and TV scenes shot on volumes, often combined with hard scenic when a character has to interact with the scenery — such as hiding behind a rock or wall or using a prop.

Once the decision had been made to use a volume, Fox’s team wasn’t content to stop there.

Do not adjust your TV set: This is an example of what the LED volume might look like in-studio when GhostFrame is active. It might seem like a random combination of multiple layers, but each camera, such as the one on the left, can only ‘see’ one of the layers thanks to the fact that each is on one of four unique refresh rates.

The team blended in GhostFrame technology, which separates the imagery shown on the wall into four refresh rates. Each camera is tuned to a specific rate and can therefore ignore the other backgrounds.

The human eye, meanwhile, can’t typically distinguish between refresh rates, which is why we see the odd, layered look. To the talent and anyone standing in the studio, the image looks a bit unusual, often with repeating versions of the same graphics in different positions. Even so, it is clear enough that talent can, for example, be guided to point to the correct area of the volume, giving the setup another leg up over traditional chroma key surrounds.
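To make the layering idea concrete, here is a simplified model in Python. It assumes the wall interleaves four layers in fixed time slots and that each camera’s exposure is synchronized to exactly one slot; the slot model, function names and layer names are hypothetical illustrations, not GhostFrame’s actual implementation or API.

```python
# Simplified model of an LED wall interleaving four layers (hypothetical;
# not GhostFrame's actual implementation or API).
NUM_SLOTS = 4  # the article describes four separate layers


def wall_sequence(layers: list[str], cycles: int) -> list[str]:
    """Return the image the wall shows in each time slot, cycling through the layers."""
    if len(layers) != NUM_SLOTS:
        raise ValueError(f"expected {NUM_SLOTS} layers, got {len(layers)}")
    return [layers[i % NUM_SLOTS] for i in range(cycles * NUM_SLOTS)]


def camera_view(sequence: list[str], slot: int) -> set[str]:
    """A camera synced to one slot records only the images shown in that slot."""
    return {image for i, image in enumerate(sequence) if i % NUM_SLOTS == slot}


def studio_view(sequence: list[str]) -> set[str]:
    """The eye blends every slot, so all layers appear superimposed."""
    return set(sequence)


if __name__ == "__main__":
    # Hypothetical layer names: one perspective-corrected background per camera.
    layers = ["camera_1_view", "camera_2_view", "camera_3_view", "camera_4_view"]
    sequence = wall_sequence(layers, cycles=60)
    for slot in range(NUM_SLOTS):
        print(f"camera synced to slot {slot} sees {camera_view(sequence, slot)}")
    print(f"people in the studio see {sorted(studio_view(sequence))}")
```

In this model each camera’s feed contains only its own layer, while the studio view contains all four at once, which mirrors the odd, layered look people on the floor experience.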

“The technological advancements that Fox made this year will influence sports broadcast for years to come,” said Marsh. “Live broadcast is beginning to have the ability to match film-quality productions in a fraction of the time, and this will only continue to improve.”

Combined with Stype Spyder camera tracking, which monitors camera positions, and the ability to direct each camera to relay only the imagery displayed at a given refresh rate, this gives Fox the capability to show different backgrounds behind different cameras.

While this could, in theory, be used to showcase entirely different environments on each camera, Fox has mostly tied GhostFrame in with the camera tracking and rendering engines so that each camera angle captures a different version of the same environment, with subtle shifts in perspective. This makes the end result feel realistic rather than looking as though it’s simply being shot against three video walls, as a theatre set might be.

In this image taken during production of the ‘Fox NFL Sunday’ season kickoff open, talent is shown standing in front of and on the image of a street corner displayed on seamless LED panels. The shot is relatively tight, so it doesn’t fill the entire volume, and portions of it are skewed in order to account for how the buildings would appear to the eye if they were real. (The camera doesn’t capture the empty black parts.)

Feeding perspective-distorted backgrounds to different portions of an LED volume to account for different camera angles is a common technique, but it typically involves the volume showing one segment of the background adjusted for a given camera’s perspective while other parts remain in their default view. Because of this, the areas where each camera is pointed can’t overlap, since the overlapping region would create an odd look.

However, with GhostFrame technology as part of the secret sauce, each camera sees only its assigned refresh rate, so camera views no longer have to be kept apart. It also means the director and other crew members in the control room see exactly what a shot will look like when it’s punched up, rather than the multi-layer version seen in the studio.

Fox Sports already has multiple options for using the volume, which are typically built around a 3D model of the virtual space that’s rendered and then broken out by GhostFrame as needed. It can be used to create an indoor field of sorts, allowing talent to demonstrate plays, as well as to showcase virtual set extensions with a variety of topical graphics inserted.

Furthermore, plans call for more innovation, which is possible thanks to the dynamic and flexible system Fox and Girraphic engineered.

“I feel like we are just scratching the surface with the LED volume. As we grow, these environments will evolve from being spaces for the talent to more functional interactive environments,” said Fields.

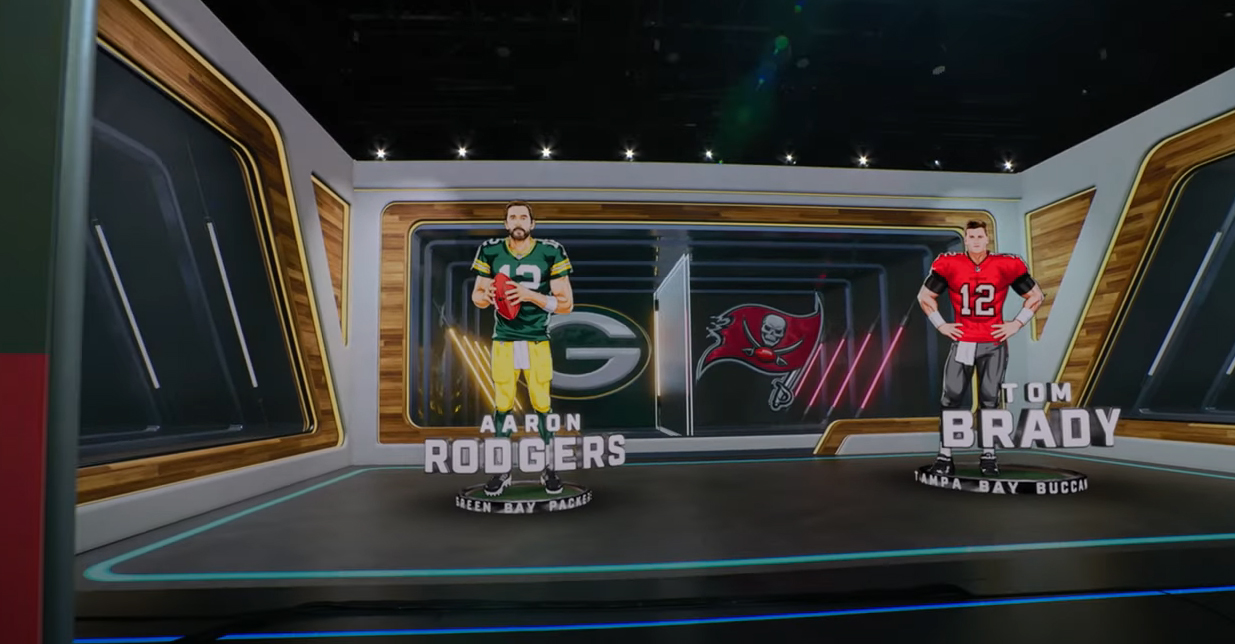

In addition to the volume, Fox can bring in extended reality elements throughout the space, including both the volume and the hard set (or shots that include both at the same time). These elements are more in line with those widely used in the past, such as floating panels or giant freestanding cutouts showcasing player photos and stats.

These digital effects have their own dedicated Unreal engines.

Operators’ control extends well beyond the basics, particularly in the ‘On the Field’ segment.

“We have control over the obvious things like player photos and video, but also have control over some unique pieces. For example … operators can change things like the time of day or even the color of the jerseys the crowd is wearing,” explained Marsh.

This is accomplished with help from Erizos control integration.

Girraphic reviewed Fox’s current workflows from their other shows and stages and worked with Erizos to recreate some of those on its platform, allowing operators to get hands-on with the new controls with minimal effort.

This required finding a way to make it easy for operators to control 36 real-time engines efficiently.

Inevitably, broadcasters have to plan for contingencies, which could include failure in one or more components of the systems that run the LED volume and rendering. There are spare renderers available that can be brought online if there’s a failure on a primary one.

There’s also the option to shoot around a malfunctioning part of the volume — whether it’s a rendering software or hardware failure. The system can also remove GhostFrame to simplify processes and still shoot against other backgrounds, depending on the segment.

As a last resort, the network can fall back on its hard practical set at the opposite end of the studio.
